Expanse Cabaret

Elijah and I (of ShowStages) were invited to add video to the Expanse Cabaret event at New City Legion for this year’s Expanse festival. The variety show was part of the Expanse Movement and Dance festival, presented by Azimuth Theatre.

The cabaret event featured a high-energy performance by Mark Mills, with a variety of dance and dramatic performances throughout the evening.

Setup

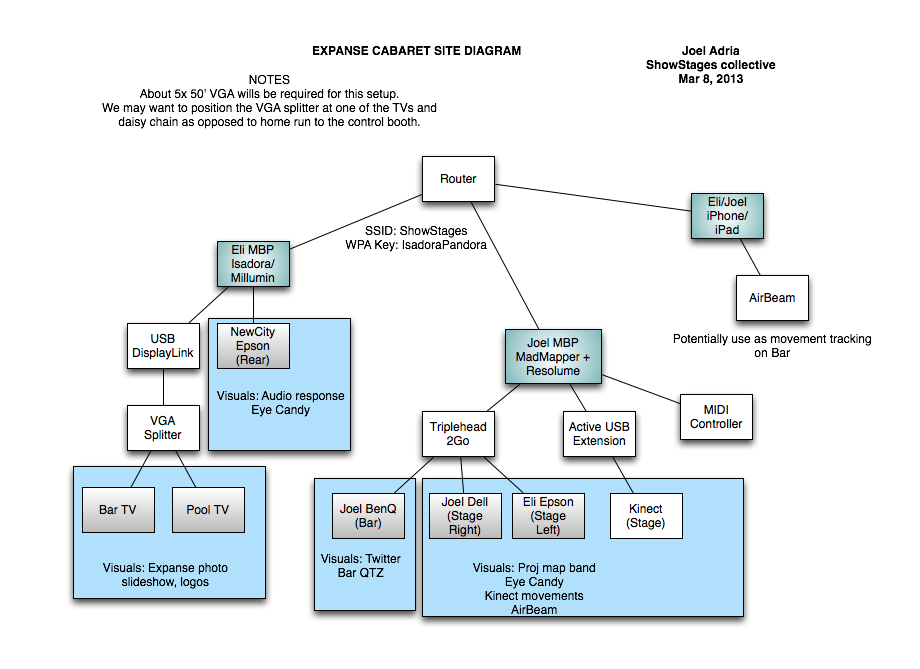

For outputs, we had access to New City’s existing gear, plus our own arsenal of projectors and equipment. This worked out to be:

- Two HDTVs installed on the bar and above the pool table

- A projector and screen mounted on one wall

- Two of my projectors (including a new Dell 5100MP I found on Kijiji)

- Eli’s Epson projector

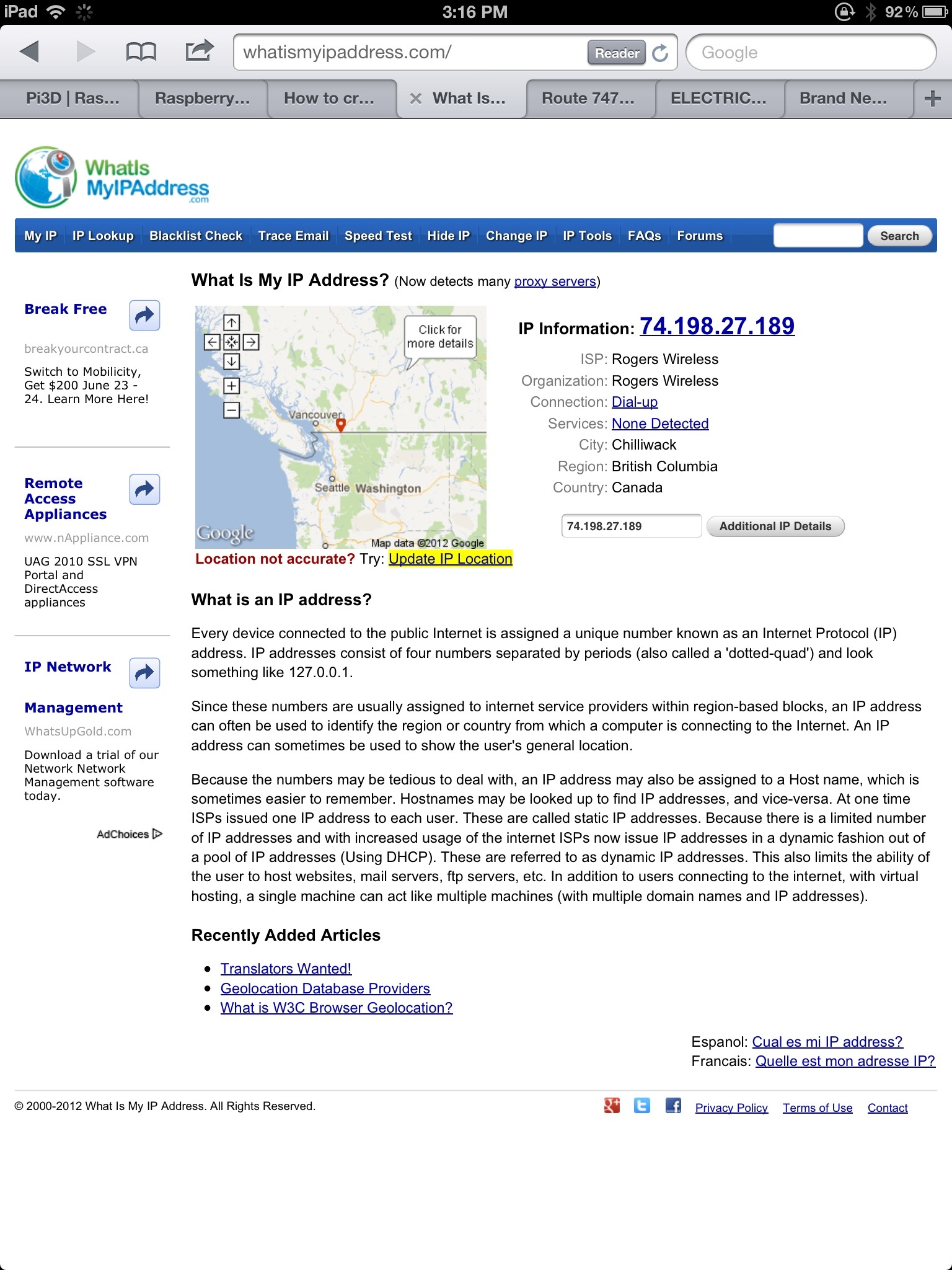

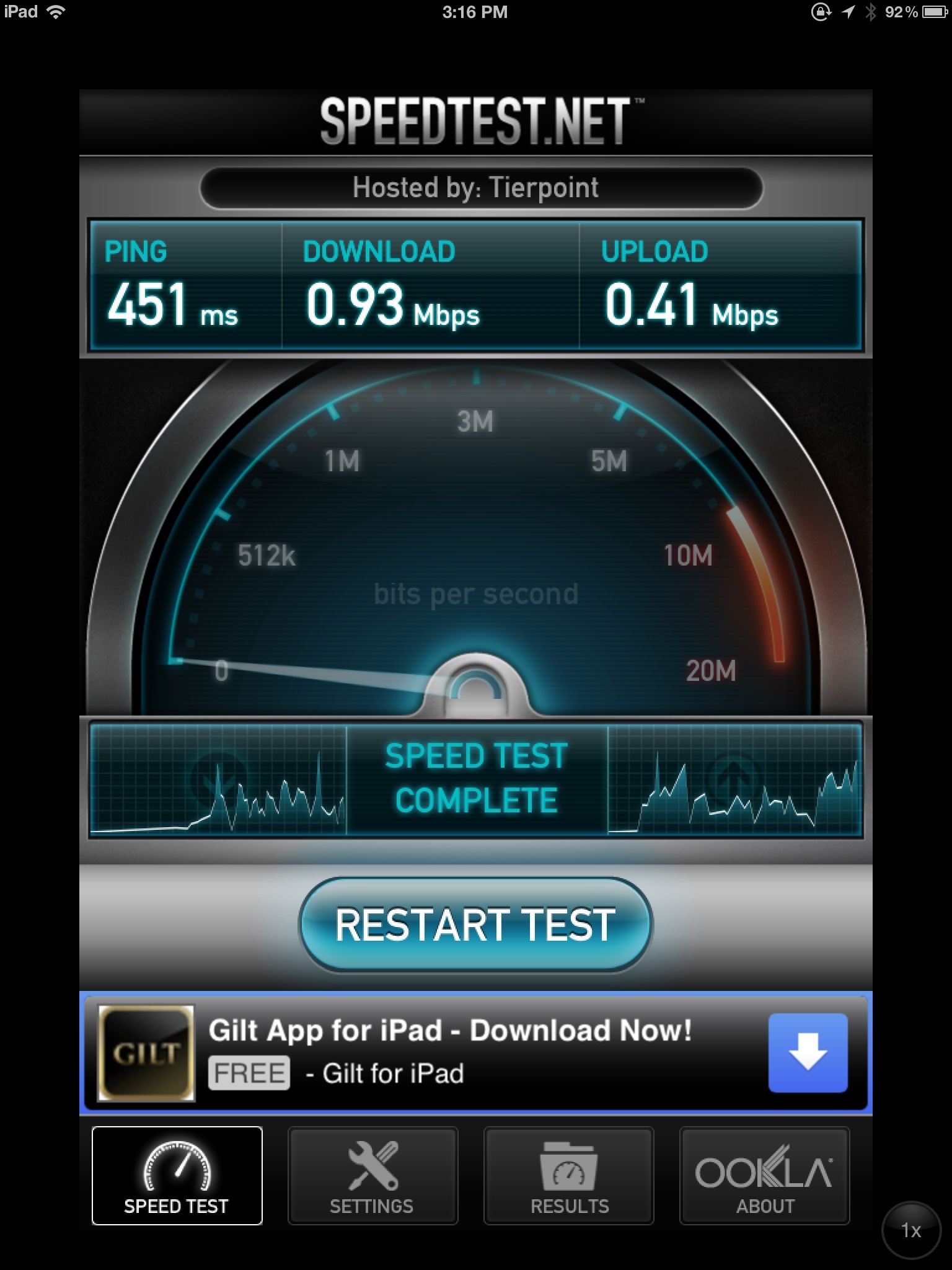

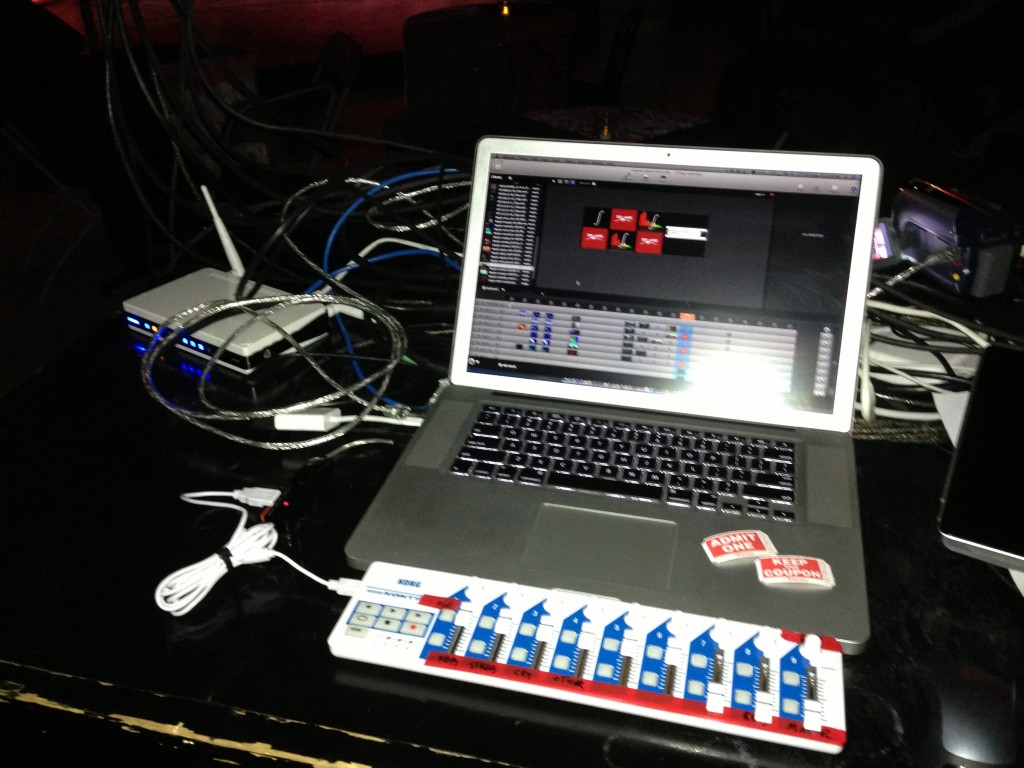

With that many outputs, we needed to stretch the capabilities of our two MacBook Pros, with just one output each. On my system, I ran our TripleHead2Go to power two projectors pointed cross-shot on the stage. The third output powered a projector near the bar that beamed a steady stream of tweets across the bar top.

On Eli’s machine, we ran the projection screen straight out of the MBP, and used my USB DisplayLink to get an extra out. We mirrored this channel to power both TVs with the same content. The USB adapter performed like a champ, with no glitches or noticeable lags with the content we were giving it.

We ran VGA for all sources, which took the bulk of our setup time. Hopefully we can work towards cutting this time down with VGA extenders or a road case rig.
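To make the routing above easier to follow, here is a rough sketch of it as a small Python table. The device names come from the description above; the short labels (such as "stage projector A") are just ours for illustration, and we are not claiming anything about how the mirrored TV signal was physically split.

```python
from collections import Counter

# Each route: (laptop, how the signal leaves the laptop, content, display).
# Device names follow the write-up; the A/B labels are illustrative only.
ROUTES = [
    ("my MBP", "TripleHead2Go out 1", "stage map A", "stage projector A"),
    ("my MBP", "TripleHead2Go out 2", "stage map B", "stage projector B"),
    ("my MBP", "TripleHead2Go out 3", "Twitter bar", "bar projector"),
    ("Eli's MBP", "built-in output", "screen content", "house projection screen"),
    ("Eli's MBP", "USB DisplayLink", "TV content", "HDTV at the bar"),
    ("Eli's MBP", "USB DisplayLink", "TV content", "HDTV above the pool table"),
]


def displays_per_laptop(routes):
    """Count how many physical displays each laptop ends up feeding."""
    return Counter(laptop for laptop, _, _, _ in routes)


def distinct_signals(routes):
    """Unique (laptop, content) pairs; mirrored displays share one signal."""
    return {(laptop, content) for laptop, _, content, _ in routes}


if __name__ == "__main__":
    for laptop, count in displays_per_laptop(ROUTES).items():
        print(f"{laptop}: {count} displays")
    print(f"{len(distinct_signals(ROUTES))} distinct signals in total")
```

Laid out this way, it is easy to see that the two laptops fed six displays between them, and that the mirrored TVs share a single signal.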

Software

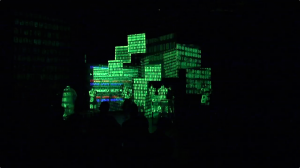

We really wanted to put our MadMapper rig to good use on this show. The angle that we projected the Twitter bar from was really awkward: a steep, upside-down diagonal. It wasn’t ideal, but it was a convenient placement and got the messages across. MadMapper got it set up in no time at all.
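For the curious, the general idea behind correcting an awkward angle like this is a four-corner (perspective) warp: you drag the corners of the content to where they should land on the surface, and everything in between follows. The sketch below is not MadMapper's code, only a plain-Python illustration of the geometry. The function names and the `DST` corner positions are made up for the example.

```python
def solve_linear(a, b):
    """Solve a*x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner layout")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def solve_homography(src, dst):
    """Find the 8 warp coefficients mapping 4 src corners onto 4 dst corners."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -x * u, -y * u])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -x * v, -y * v])
        b.append(v)
    return solve_linear(a, b)


def warp(h, x, y):
    """Map one content point (x, y) into projector coordinates."""
    w = h[6] * x + h[7] * y + 1.0
    return ((h[0] * x + h[1] * y + h[2]) / w,
            (h[3] * x + h[4] * y + h[5]) / w)


# Content corners in normalized coordinates (top-left, clockwise).
SRC = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
# Hypothetical projector pixels where those corners should land.
DST = [(120.0, 40.0), (980.0, 210.0), (900.0, 690.0), (60.0, 420.0)]

if __name__ == "__main__":
    h = solve_homography(SRC, DST)
    print("centre of content lands at", warp(h, 0.5, 0.5))
```

In practice the mapping software does this for you interactively, which is exactly why the setup took no time.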

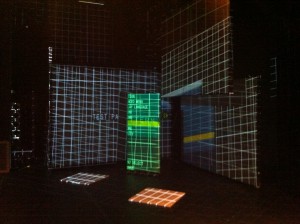

The other two projectors beamed onto each side of the stage. I mapped out a few amplifiers, the walls, and some picture frames we whited out with a few old posters. The response was great, with one person even asking if we had mounted screens in the walls. The low light combined with the decent res of the Dell made for a great map.

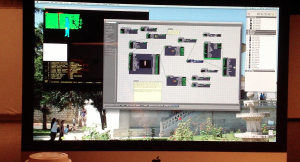

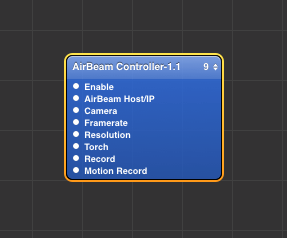

For playback, Eli took to our friend Isadora, running the TVs and screen as two stages. For a portion of the event, we were asked to include a remote performance by the Good Women Dance group, which was beamed in over FaceTime as they performed pieces in various locations along Whyte Avenue. To maintain a clean look for the screen, we used a MaxMSP patch that siphoned the output of FaceTime into Isadora. It was a bit clunky, occasionally flashing the wrong portion of the screen when FaceTime auto-rotated with the remote iPhone. (We found an alternative in VDMX later…)

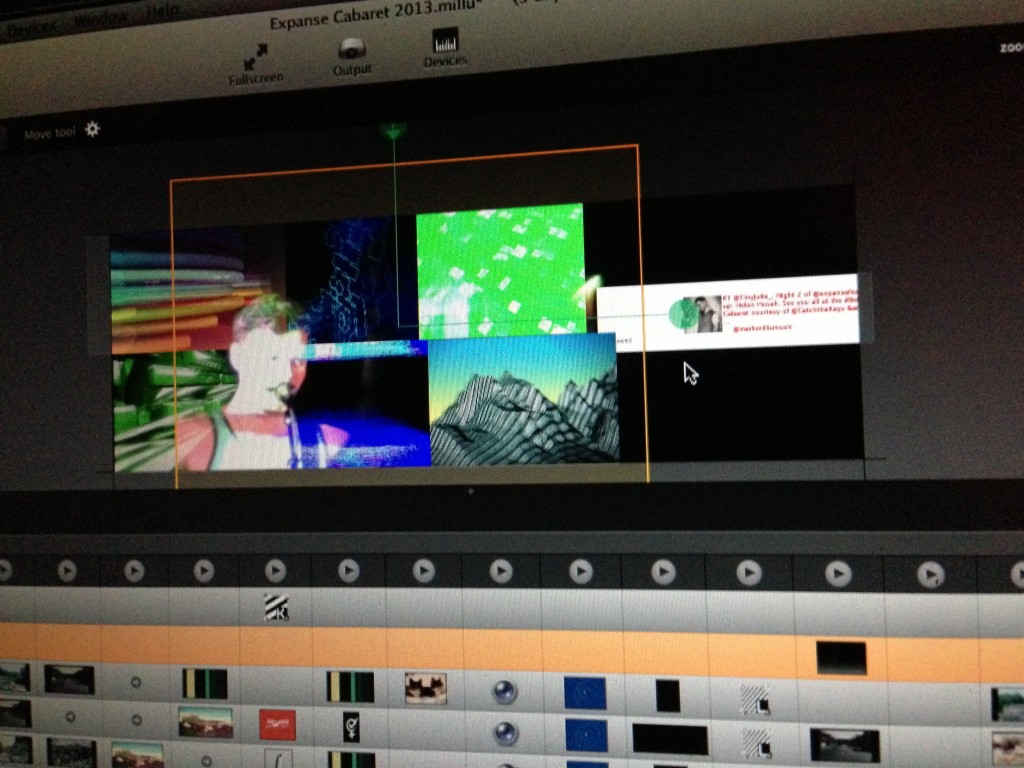

Originally I wanted to use Eli’s license of Resolume Avenue for playback on my computer, but not having too much experience with it, I opted to use the last few days of my Millumin trial for the event.

It was a real winner for its ability to arrange different setups and recall them quickly, while also being able to arrange the layers into 6 different media positions in MadMapper. It was also great at normalizing different content sizes, all while keeping the Twitter bar running on the side. It even brought in a live video feed from an old Mini DV camera I brought on a whim, which turned out to be a killer effect.

Where Millumin fell down was its inline effects (or lack thereof). There are a few built-in essentials, like color adjustment and soft crop, that are great for everyday use. However, they aren’t easily adjustable on the fly for multiple layers unless you MIDI map each layer. Millumin’s author has assured me that custom effects are likely on their way, as custom Quartz filters could be fairly easily implemented in the video pipeline.

Callback

The wicked Dart Sisters of Catch the Keys Productions asked us to stick around for the second event of the Expanse festival Saturday night, featuring amazing spoken word artist C. R. Avery.

With a chance to do it all over again, and a better sense of what works in a live video performance, I decided to grab a license of VDMX for the second night. I had used VDMX years ago before they redesigned it, and knew how powerful it could be for a setup like the Expanse Cabaret. The great folks at VIDVOX were prompt in getting me a student discount, and I was up and running for sound check.

I had some trouble getting the video layers to the right size and crop, which Millumin had handled so easily, but I marched into the darkness with VDMX and some revised video mapping areas for the new sets. It was a bit of a struggle to get where I wanted to go with VDMX, mostly because I hadn’t established much of a “rig”, which VDMX users are famous for building.

By the end of the night, I had discovered some neat built-in filters and effects, and was mixing in live video like I had in Millumin. Although we had no FaceTime to syphon in this night, VDMX has a great window capture system that allows you to grab any open (or hidden) window as a video source.

Next Steps

I’m looking forward to experimenting with VDMX and building a custom rig for future events like this, and possibly theatre applications.

With some great effects achieved with live video layering into our content, we’ll definitely be playing more with that.

We mostly used stock VJ clips for this event, but finding and creating clips for this purpose is definitely an area we’d like to look into. We had our Kinect hooked up for the event, but found that it wasn’t always effective in a projection-mapped environment. There may be other ways in which it can be applied, though.

With the success of the Twitter bar, we’ll definitely be seeing what other “set and forget” interactive experiences we can add to evening events.