DELETE Project

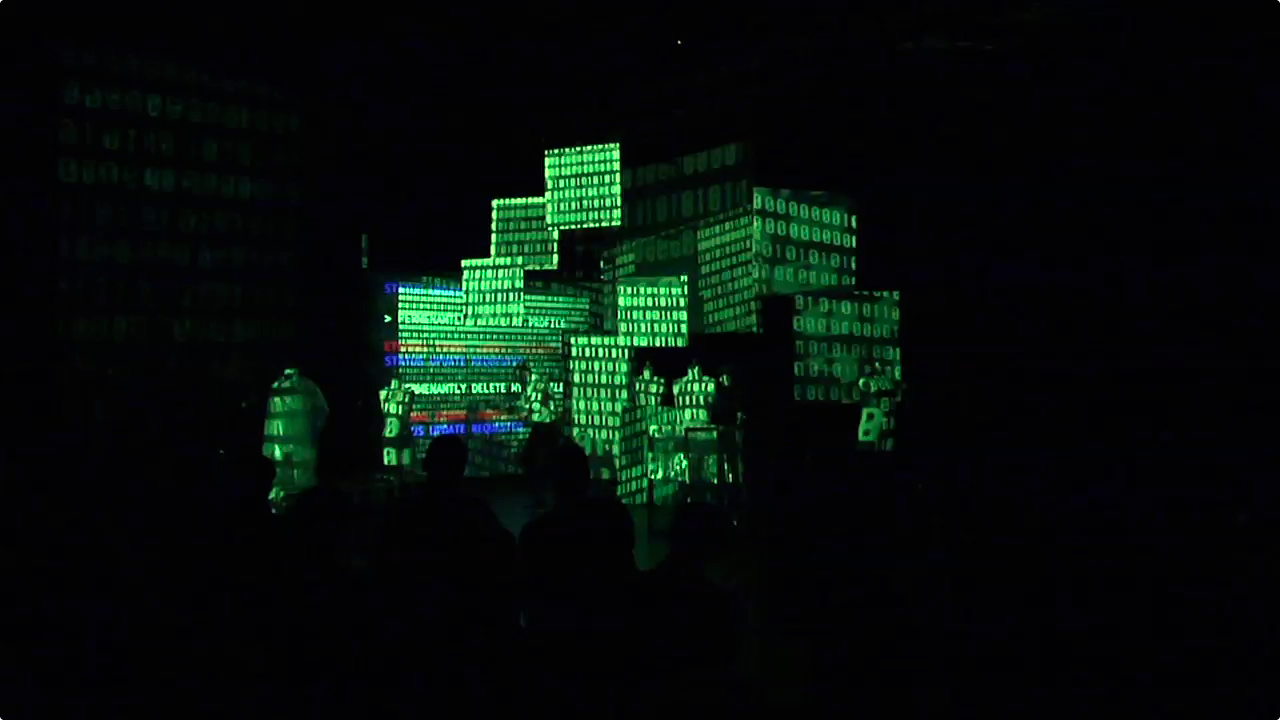

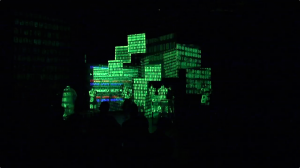

This summer I had the opportunity to work on an interactive video/theatre project entitled DELETE. The brainchild of Stefan Dzeparoski, DELETE tells the story of Konstantin, a man trapped within a digital world. Erin Gruber served as the media designer, and I served as a media consultant. The Canadian Centre for Theatre Creation funded and organized the project, which was developed and presented in the University of Alberta Second Playing Space. The presentation was part of the StageLab experimental theatre series at the end of August. Here’s what the CCTC wrote about it:

Delete examines the theme of what Dzeparoski calls “the eternal immigrant”. Its story depicts a man named Konstantin attempting to come to terms with a past that haunts him during the last hours before the end of the earth. Gifted with a perfect memory, he lives in a world of the not-too-distant future where everyone has come to rely on digital technology as a repository for past events. He too, has rejected his own memory in favour of the more conveniently selective digital version, but he cannot escape his gift and finds himself compelled to confront his loved ones before the clock runs out.

– Ian Leung for the CCTC, full article

Beginnings

Our first workshop in May 2012 explored the technologies we wanted to experiment with, together with the dramaturgical and visual ideas we wanted to include. Our goal was to come away with ideas we could carry forward into a week-long workshop process in August.

We played with the Microsoft Kinect sensor using a variety of tools, and determined that NI Mate provided the simplest and most flexible output of the various software kits. Masking textures was particularly intriguing: we sent a silhouette output from NI Mate into Isadora via Syphon and used it as a mask. The masked video could then be aligned to the actual human form in front of the Kinect, projecting the virtual Konstantin in and around the physical character on stage. Green screen footage of the character served as our source video, along with a collage of other images collected in a Dropbox before the workshop weekend.
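To make the masking idea concrete, here is a minimal sketch in plain Python. It is not how NI Mate, Syphon or Isadora do it internally (they work on textures, and the details are not something I can speak to); the function name, the tiny frame and the threshold value are all made up for illustration. The idea is simply that wherever the silhouette is bright, the source video shows through, and everywhere else goes black.

```python
def apply_silhouette_mask(frame, mask, threshold=128):
    """Keep source pixels where the silhouette mask is bright; black elsewhere.

    frame: rows of (r, g, b) tuples, standing in for a video frame.
    mask:  rows of 0-255 grey values, standing in for a silhouette image.
    threshold: hypothetical cut-off between "body" and "background".
    """
    if len(frame) != len(mask) or any(
        len(frame_row) != len(mask_row) for frame_row, mask_row in zip(frame, mask)
    ):
        raise ValueError("frame and mask must have the same dimensions")
    return [
        [pixel if grey >= threshold else (0, 0, 0)
         for pixel, grey in zip(frame_row, mask_row)]
        for frame_row, mask_row in zip(frame, mask)
    ]


# A tiny 3x2 "video frame" and a silhouette covering part of it.
frame = [[(200, 50, 50)] * 3, [(50, 200, 50)] * 3]
mask = [[0, 255, 255], [0, 0, 255]]
masked = apply_silhouette_mask(frame, mask)
```

In a real setup the mask would change every frame as the performer moves, which is what lets the projected image follow the body.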

We also experimented with flame and alphabetic imagery mapped to physical hands, using skeletal data fed into Quartz Composer. This too was mapped to the human figure, who could wave fire around and blow letters into the screen.

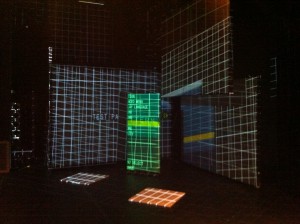

We also explored projection surfaces, using both traditional screens and draped fabric, which would inspire the final design in August.

Equipment setup

When we reconvened in August, we had full access to the University of Alberta’s video equipment. Key components included:

- 4x Panasonic 5000-lumen projectors
  - One was used in a rear-projection (RP) configuration
  - One hung overhead, mostly for mapping
  - Two cross shot in wide angle to provide scenic texture to the space
- 8-core Mac Pro, dual graphics cards
- IsadoraCore
- NI Mate licensed Kinect
- Adobe CS
- AirBeam

This was accompanied by a full sound setup from Matthew Skopyk and my own MacBook Pro, all linked on a gigabit network.

Much of the video content needed to be produced before we could start experimenting on stage. We spent a day shooting in different locations on green screen and in real space. We shot video on a Panasonic HD camcorder straight into Final Cut Pro, logging video clips as we went. The batch export facilities allowed us to quickly split audio & video, key out green screen in batch, and convert them into Photo JPEG, an optimal codec for Isadora.

Tip: If you’re looking to key (green screen) out a number of clips at once using Final Cut, there’s an interesting trick to doing this. First, drag all the clips you want to process into a sequence. Apply the Key effect onto all the clips in the sequence, using Copy & Paste Settings… or similar. Then, drag these clips out of the sequence into a new media bin. These are now new independent clips with the effects applied to them, and can be Batch Exported out of Final Cut in the traditional fashion.

AirBeam

One discovery that we immediately incorporated into the project in August was the AirBeam wireless camera software. An app for iOS & OS X, AirBeam allows video to be streamed over the network to any Mac, iOS Device, or supported Web Browser. On the Mac side, the client can enable Syphon throughput, enabling a quick and simple low-latency on-stage video source straight into Isadora or any other Syphon-enabled video application. It’s completely wireless, and lasts at least an hour on battery.

This proved a hit for the project, and was used throughout the show. My iPhone was placed in different positions, both handheld and on set pieces. I wrote a custom Quartz/Isadora patch called AirBeam Controller that allowed us to enable/disable the flashlight, select the front or back camera, and more. We dropped the controller actor into each Isadora scene with the right settings, so the iPhone was always in the right configuration when we needed it.

One thing we did notice was that the iPhone's battery drained quite quickly. I had an external battery pack, but we still needed to make sure the phone was charging during breaks in the workshop process. I suggested to the developers of AirBeam that a battery meter be integrated into the monitoring software for this exact purpose, a suggestion they gladly accepted.

We also placed Erin’s iPad in the grid to use as a bird’s-eye view of the scene. This provided a second AirBeam camera source into the setup at some points during the performance.

Lady Isadora

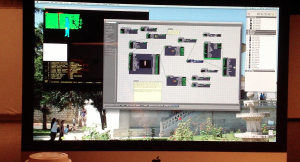

Being relatively new to Isadora, I definitely learned some hard lessons about the architecture of the program in developing our setup for DELETE. We mostly relied on the “classic” actors in Isadora, as they provided convenient access to the 3D Quad Distort actor, an essential for mapping video elements onto the hung “picture frame” elements in the physical space. We had access to the Core Image actors, but they didn’t provide an intuitive enough way to map video elements to our set.

Some of the classic actors, however, proved extremely CPU-intensive for the number of outputs and mappings we were performing. We diagnosed some of the delays in processing, but even so, Isadora had a hard time keeping up. I learned that the Isadora video core is largely CPU-based, as it was designed at a time when that was the best way to achieve reliable effects on different platforms. As a result, all video processing must occur within a single thread on the CPU before being displayed. Multi-threaded video playback is a beta feature, but was not stable enough for our needs.

This dropped our framerates down to 8-12 fps, which wasn’t acceptable. We optimized scenes where we could, but ultimately we needed to pre-render several scenes using the built-in Isadora facilities to improve playback during the performance.
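To put those numbers in perspective, it helps to convert frame rates into the time available per frame. The short sketch below does that arithmetic; the 30 fps target is my own assumption for comparison, not a figure from the project.

```python
def frame_time_ms(fps):
    """Return how many milliseconds each frame takes at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


TARGET_FPS = 30  # assumed target for smooth playback, for comparison only
budget = frame_time_ms(TARGET_FPS)

for fps in (8, 12):
    spent = frame_time_ms(fps)
    print(f"{fps} fps: {spent:.1f} ms per frame against a {budget:.1f} ms budget")
```

At 8-12 fps, each frame was taking roughly 83 to 125 ms to process, several times what a 30 fps target would allow, which is why pre-rendering the heaviest scenes made such a difference.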

In the future, I would definitely look into better options for video mapping (I’m already playing with my own copy of MadMapper these days), and also make sure that the video actors we are working with are reliable and light on CPU usage. Core Image actors are obviously ideal, but they don’t always match the functionality of the classic Isadora ones.

Process

One great thing about working with Stefan on this project was how important the video elements were to the story. We easily spent 75% of our time working through visual effects, video storytelling, and experimenting with new technologies. This allowed the video to truly serve as a dramaturgical element, something that is difficult to achieve in an ordinary theatrical production, where time and resources are not as abundant. It certainly serves as a great example to me of how effective video can be when it is part of the development process from all angles.

Matthew Skopyk provided the sound design for DELETE, and eagerly integrated audible elements with our visuals. We provided Matt with all of the audio tracks from the video we recorded, allowing him to process and modify them. We then triggered the sound cues over OSC from Isadora, playing back the tracks in Matt’s Ableton Live setup. In May, we also experimented with having intentional audio “glitching” trigger simultaneous video “glitching”, to create the illusion that virtual Konstantin was fading away into the virtual world.
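For anyone unfamiliar with OSC, a cue trigger is just a small UDP packet containing an address and some arguments. Here is a minimal sketch of building and sending one using only the Python standard library. In the show Isadora did the sending, so this only illustrates what travels over the network; the `/delete/cue` address, the host and the port are hypothetical, and how the receiving end maps messages to clips depends entirely on how it is set up.

```python
import socket
import struct


def _osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * ((4 - len(data) % 4) % 4)


def build_osc_message(address, *args):
    """Build a binary OSC message with int32, float32 or string arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("bool arguments are not handled in this sketch")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # big-endian 32-bit integer
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # big-endian 32-bit float
        elif isinstance(arg, str):
            type_tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_osc(host, port, address, *args):
    """Send a single OSC message over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(build_osc_message(address, *args), (host, port))


# Hypothetical cue: ask the sound machine to fire cue number 3.
message = build_osc_message("/delete/cue", 3)
# send_osc("192.168.0.20", 9000, "/delete/cue", 3)  # made-up host and port
```

Because OSC normally runs over UDP, there is no acknowledgement that a cue arrived, so a dedicated wired network like the one we used is a sensible precaution.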

Outcomes

The single performance of the show attracted a large audience to the SPS, who responded well to the 25-minute piece. It was great to use the Kinect & AirBeam on stage in such effective ways, and with a team that was genuinely interested in integrating them into the piece.

The project served as a catalyst for the creation of the ShowStages video collective, consisting of Erin Gruber, Joel Adria, and Elijah Lindenberger, who are interested in pursuing these kinds of projects further.

Be sure to check out more images from the project in the gallery below, and listen to the podcast episode recorded immediately after the show.